The planets and other bodies in our solar system orbit the sun along elliptical trajectories, with the sun located at one of the two foci of each ellipse. Most planets have low-eccentricity orbits that are approximately circular, while other bodies such as comets have highly eccentric orbits. This raises the question: how can stable orbits be asymmetric if the gravitational field from the sun is spherically symmetric? The reason is the periodic exchange between kinetic and potential energy. You see the same phenomenon in a pendulum or a spring: as these objects oscillate, energy changes form while the total remains constant (neglecting friction). In a perfectly circular orbit, however, there is no exchange, since kinetic and potential energy each remain constant.

The physics of this can be worked out using Lagrangian mechanics. The first step is writing a Lagrangian describing an orbiting body, which is the body’s kinetic energy minus its potential energy. The kinetic energy depends on its velocity, and the potential energy depends on its distance from the sun. Put together, these give the Lagrangian $L$:

$$L = \frac{1}{2} m v^2 - U(r)$$

where $v$ is the velocity of the planet, $m$ is the mass of the planet, and $U(r)$ is the potential energy from the sun’s gravity, which depends on the radial distance $r$. The explicit form of $U(r)$ is not relevant yet.

It is easier to work in polar coordinates rather than Cartesian coordinates for this problem. We can simplify things by noting that the planet does not leave the plane of its orbit, so the $z$ coordinate is zero. Then the $x$ and $y$ coordinates can be converted to polar coordinates $r$ and $\theta$:

$$x = r\cos\theta, \qquad y = r\sin\theta$$

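Take the derivative with respect to time to get the velocity vector, and then square it for the kinetic energy term:

$$\dot{x} = \dot{r}\cos\theta - r\dot{\theta}\sin\theta, \qquad \dot{y} = \dot{r}\sin\theta + r\dot{\theta}\cos\theta$$

$$v^2 = \dot{x}^2 + \dot{y}^2 = \dot{r}^2 + r^2\dot{\theta}^2$$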

We are then left with a Lagrangian in polar coordinates:

$$L = \frac{1}{2} m \left(\dot{r}^2 + r^2\dot{\theta}^2\right) - U(r)$$

There are two degrees of freedom here: radial and angular. To get the equations of motion, we need to evaluate the Euler-Lagrange equation for each degree of freedom.

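For angular:

$$\frac{d}{dt}\frac{\partial L}{\partial \dot{\theta}} = \frac{\partial L}{\partial \theta} = 0$$

since there is no explicit dependence on angle $\theta$. Evaluating the left-hand side gives:

$$\frac{d}{dt}\left(m r^2 \dot{\theta}\right) = 0, \qquad \ell \equiv m r^2 \dot{\theta}$$

The quantity $\ell$ is constant with respect to time, and is the angular momentum of the orbiting body.

We can then express the angular velocity in terms of $\ell$:

$$\dot{\theta} = \frac{\ell}{m r^2}$$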

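For the radial degree of freedom:

$$\frac{d}{dt}\frac{\partial L}{\partial \dot{r}} = m\ddot{r}, \qquad \frac{\partial L}{\partial r} = m r \dot{\theta}^2 - \frac{dU}{dr}$$

Equate these and you get the equation of motion:

$$m\ddot{r} = m r \dot{\theta}^2 - \frac{dU}{dr}$$

Plug in the equation for angular velocity, $\dot{\theta} = \ell / (m r^2)$, and the equation of motion only depends on $r$:

$$m\ddot{r} = \frac{\ell^2}{m r^3} - \frac{dU}{dr}$$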

This differential equation can then be solved to get the trajectory of an orbiting body.
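Before solving it analytically, the radial equation can be integrated numerically as a sanity check. The following is a minimal sketch, not the author's method: it assumes the inverse-square potential $U = -GMm/r$, works in units where $GM = 1$ and $m = 1$, and uses a classical fourth-order Runge-Kutta step. The function names and the starting values are hypothetical choices made for illustration.

```python
import math

def radial_accel(r, ell, gm=1.0, m=1.0):
    """r'' from m r'' = ell^2 / (m r^3) - dU/dr, assuming U = -G M m / r."""
    return ell**2 / (m**2 * r**3) - gm / r**2

def integrate_radius(r0, rdot0, ell, t_end, dt=1e-3):
    """Integrate (r, r') with classical RK4 and return the list of visited radii."""
    r, v = r0, rdot0
    radii = [r]
    for _ in range(int(round(t_end / dt))):
        k1r, k1v = v, radial_accel(r, ell)
        k2r, k2v = v + 0.5 * dt * k1v, radial_accel(r + 0.5 * dt * k1r, ell)
        k3r, k3v = v + 0.5 * dt * k2v, radial_accel(r + 0.5 * dt * k2r, ell)
        k4r, k4v = v + dt * k3v, radial_accel(r + dt * k3r, ell)
        r += dt * (k1r + 2 * k2r + 2 * k3r + k4r) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        radii.append(r)
    return radii

# Illustrative starting point: r = 0.5 with no radial velocity and ell^2 = 0.75.
radii = integrate_radius(r0=0.5, rdot0=0.0, ell=math.sqrt(0.75), t_end=2 * math.pi)
print(min(radii), max(radii))
```

The radius oscillates between a smallest and a largest value instead of staying fixed, which is the non-circular bound motion the rest of the derivation describes in closed form.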

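To solve this differential equation, do a change of variables from distance $r$ to inverse distance $u$:

$$u = \frac{1}{r}$$

such that:

$$r = \frac{1}{u}$$

We then have:

$$\frac{dr}{dt} = -\frac{1}{u^2}\frac{du}{dt}$$

Because there is no explicit dependence on angle in the Lagrangian, the angular momentum $\ell$ is conserved, and time dependence can be converted to angular dependence using $\dot{\theta} = \ell u^2 / m$:

$$\frac{d}{dt} = \dot{\theta}\,\frac{d}{d\theta} = \frac{\ell u^2}{m}\frac{d}{d\theta}$$

$$\dot{r} = -\frac{\ell}{m}\frac{du}{d\theta}, \qquad \ddot{r} = -\frac{\ell^2 u^2}{m^2}\frac{d^2 u}{d\theta^2}$$

The equation of motion then becomes:

$$\frac{d^2 u}{d\theta^2} + u = \frac{m}{\ell^2 u^2}\frac{dU}{dr}$$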

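We now need the explicit form of the potential energy from the sun’s gravity to continue solving this. The equation is:

$$U(r) = -\frac{G M m}{r}$$

where $G$ is Newton’s constant and $M$ is the mass of the sun.

The equation of motion can then be simplified further, since $dU/dr = GMm/r^2 = GMm\,u^2$:

$$\frac{d^2 u}{d\theta^2} + u = \frac{G M m^2}{\ell^2}$$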

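Solving this is relatively easy with a variable substitution:

$$w = u - \frac{G M m^2}{\ell^2}, \qquad \frac{d^2 w}{d\theta^2} + w = 0$$

The general solution is then:

$$w = A\cos\theta + B\sin\theta$$

However, without loss of generality, this can be expressed with just one trigonometric function.

Note that:

$$A\cos\theta + B\sin\theta = C\cos(\theta - \theta_0), \qquad C = \sqrt{A^2 + B^2}, \quad \tan\theta_0 = \frac{B}{A}$$

So now we have the general solution:

$$u(\theta) = \frac{G M m^2}{\ell^2} + C\cos(\theta - \theta_0)$$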

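The constant $C$ can be shown to be equal to

$$C = \frac{G M m^2}{\ell^2}\, e$$

where $e$ is the eccentricity of the trajectory, not Euler’s number.

Put back in terms of distance, we have a function for a trajectory in terms of angle and radial distance:

$$r(\theta) = \frac{\ell^2}{G M m^2}\,\frac{1}{1 + e\cos(\theta - \theta_0)}$$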

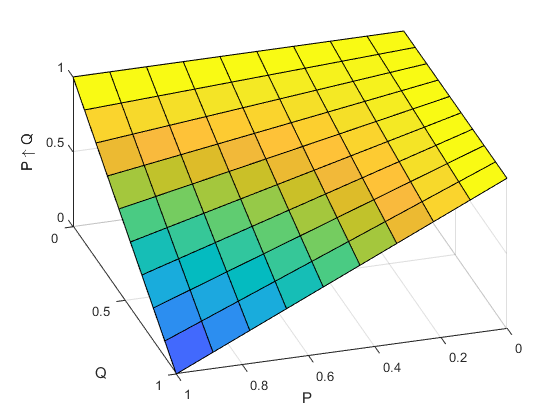

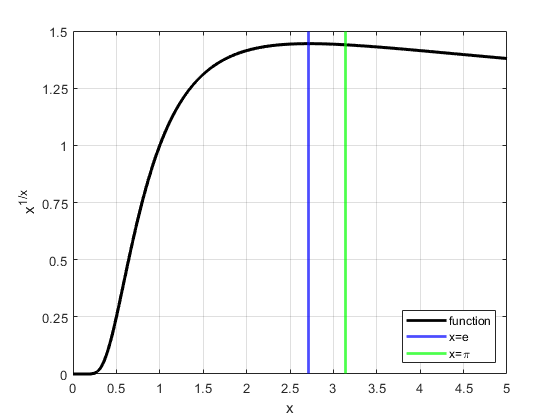

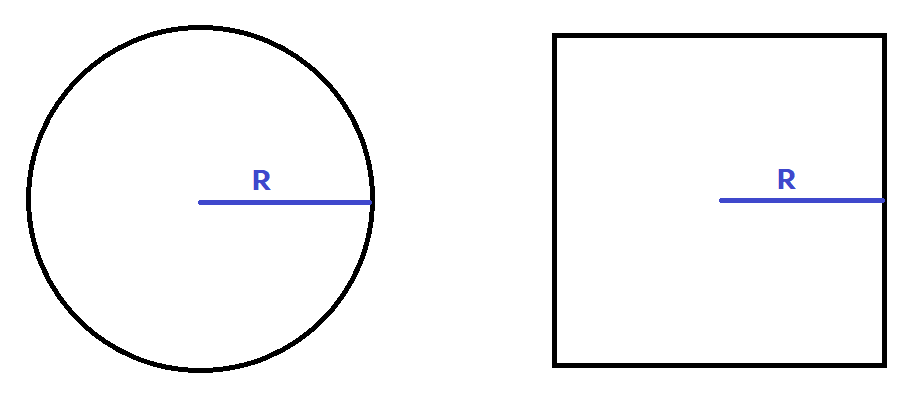

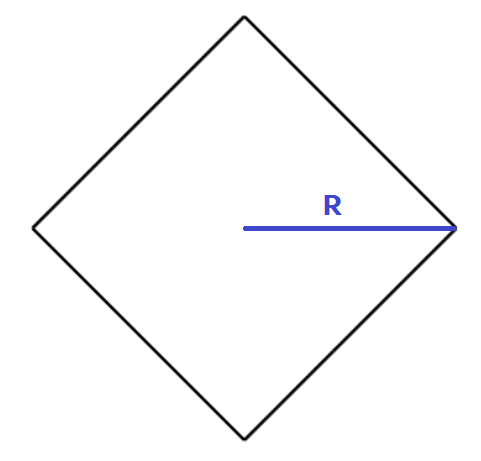

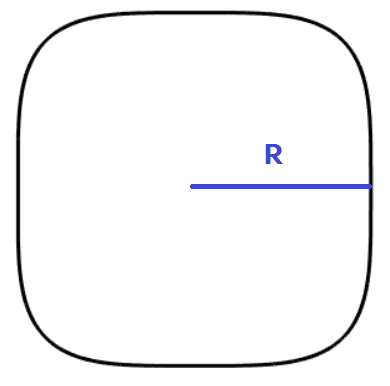
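The trajectory can be checked numerically against the differential equation it is supposed to solve. The sketch below assumes the solution has the form $u(\theta) = k\,(1 + e\cos(\theta - \theta_0))$ with $k = GMm^2/\ell^2$, and estimates $u'' + u - k$ with a central finite difference. The function names and the shorthand `k` are hypothetical, introduced only for this example.

```python
import math

def inverse_radius(theta, k, e, theta0=0.0):
    """u(theta) = k * (1 + e*cos(theta - theta0)), where k = G*M*m**2 / ell**2."""
    return k * (1.0 + e * math.cos(theta - theta0))

def radius(theta, k, e, theta0=0.0):
    """r(theta) = 1 / u(theta)."""
    return 1.0 / inverse_radius(theta, k, e, theta0)

def ode_residual(theta, k, e, h=1e-3):
    """Finite-difference estimate of u'' + u - k, which should be close to zero."""
    u_minus = inverse_radius(theta - h, k, e)
    u_mid = inverse_radius(theta, k, e)
    u_plus = inverse_radius(theta + h, k, e)
    second_derivative = (u_plus - 2.0 * u_mid + u_minus) / h**2
    return second_derivative + u_mid - k

# Illustrative values: k = 4/3 and e = 0.5.
k, e = 4.0 / 3.0, 0.5
worst = max(abs(ode_residual(0.1 * i, k, e)) for i in range(63))
print(worst, radius(0.0, k, e), radius(math.pi, k, e))
```

The residual is limited only by the finite-difference step, and the radius at $\theta = 0$ and $\theta = \pi$ gives the nearest and farthest points of this example orbit.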

Note that different values of eccentricity give different trajectory shapes:

Circle: $e = 0$

Ellipse: $0 < e < 1$

Parabola: $e = 1$

Hyperbola: $e > 1$

Each of these is a conic section: a 2D cross-section of a 3D cone.
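The classification by eccentricity is simple enough to write down directly. This is a small sketch with hypothetical function names; the tolerance used to decide when $e$ counts as exactly 0 or 1 is an arbitrary assumption. The second helper returns the angle at which $1 + e\cos\theta$ vanishes, which is where an unbound trajectory runs off to infinity.

```python
import math

def classify_conic(e, tol=1e-12):
    """Name the conic section for a non-negative eccentricity e."""
    if e < 0:
        raise ValueError("eccentricity must be non-negative")
    if e <= tol:
        return "circle"
    if e < 1.0 - tol:
        return "ellipse"
    if e <= 1.0 + tol:
        return "parabola"
    return "hyperbola"

def escape_angle(e):
    """Angle from perihelion where 1 + e*cos(theta) = 0; None for bound orbits."""
    if e < 1.0:
        return None
    return math.acos(-1.0 / e)

for e in (0.0, 0.5, 1.0, 2.0):
    print(e, classify_conic(e), escape_angle(e))
```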

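For simplicity, we can choose an axis where $\theta_0 = 0$ such that $\cos(\theta - \theta_0) = \cos\theta$.

So then we have:

$$r(\theta) = \frac{\ell^2}{G M m^2}\,\frac{1}{1 + e\cos\theta}$$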

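We can verify that this trajectory is a conic section by transforming it into Cartesian coordinates to look something like this (for $e < 1$):

$$\frac{(x - x_0)^2}{a^2} + \frac{y^2}{b^2} = 1$$

Here the transformation is done step by step, using $x = r\cos\theta$ and $r^2 = x^2 + y^2$, with the shorthand $p = \ell^2 / (G M m^2)$:

$$r + e x = p$$

$$x^2 + y^2 = (p - e x)^2$$

$$(1 - e^2)\,x^2 + 2 p e\,x + y^2 = p^2$$

The last step is then putting the trajectory equation into the form shown above by completing the square in $x$:

$$(1 - e^2)\left(x + \frac{p e}{1 - e^2}\right)^2 + y^2 = \frac{p^2}{1 - e^2}$$

where the variables $a$, $b$ and $x_0$ relate to $\ell$, $e$ and the masses by:

$$a = \frac{\ell^2}{G M m^2 (1 - e^2)}, \qquad b = \frac{\ell^2}{G M m^2 \sqrt{1 - e^2}} = a\sqrt{1 - e^2}, \qquad x_0 = -a e$$

So the trajectory can be written in terms of $a$ and $e$:

$$\frac{(x + a e)^2}{a^2} + \frac{y^2}{a^2 (1 - e^2)} = 1$$

Or back in polar coordinates:

$$r(\theta) = \frac{a (1 - e^2)}{1 + e\cos\theta}$$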

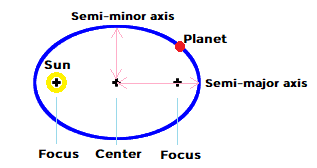
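The equivalence of the polar and Cartesian forms can be spot-checked numerically. The sketch below assumes the polar form $r = a(1 - e^2)/(1 + e\cos\theta)$ with the sun at the origin, and the Cartesian form $(x + ae)^2/a^2 + y^2/b^2 = 1$ with $b = a\sqrt{1 - e^2}$. The function name and the sample values of $a$ and $e$ are hypothetical.

```python
import math

def ellipse_residual(theta, a, e):
    """Left side minus right side of the Cartesian ellipse equation
    for the point that the polar formula gives at angle theta."""
    r = a * (1.0 - e**2) / (1.0 + e * math.cos(theta))
    x, y = r * math.cos(theta), r * math.sin(theta)
    b = a * math.sqrt(1.0 - e**2)
    return (x + a * e) ** 2 / a**2 + y**2 / b**2 - 1.0

# Sample the orbit every degree for an illustrative ellipse with a = 1, e = 0.6.
worst = max(abs(ellipse_residual(math.radians(deg), 1.0, 0.6)) for deg in range(360))
print(worst)
```

The residual stays at the level of floating-point rounding, so every sampled point of the polar curve lies on the shifted ellipse.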

A bound orbit with $e < 1$ has a minimum and maximum distance from the sun, a semi-major axis, and a semi-minor axis.

$a$: semi-major axis

$b = a\sqrt{1 - e^2}$: semi-minor axis

$r_{\min} = a(1 - e)$: perihelion (minimum distance to sun)

$r_{\max} = a(1 + e)$: aphelion (maximum distance to sun)

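Using this is actually how you solve for the constant $C$ in the general trajectory formula.

For $\theta = 0$, we can say the planet is nearest to the sun, so $r = a(1 - e)$, and for $\theta = \pi$, we can say it is at its farthest from the sun, so $r = a(1 + e)$.

This gives a system of two equations that can be solved:

$$\frac{1}{a(1 - e)} = \frac{G M m^2}{\ell^2} + C, \qquad \frac{1}{a(1 + e)} = \frac{G M m^2}{\ell^2} - C$$

$$\frac{G M m^2}{\ell^2} = \frac{1}{a(1 - e^2)}, \qquad C = \frac{e}{a(1 - e^2)} = \frac{G M m^2}{\ell^2}\, e$$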
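The two conditions at nearest and farthest approach can also be solved in code. This is a minimal sketch under the assumption that the inverse distance is $u = k + C\cos\theta$ with $k = GMm^2/\ell^2$, so that $1/r_{\min} = k + C$ and $1/r_{\max} = k - C$. The function name is hypothetical, and the Earth distances are rounded approximate values used only for illustration.

```python
def orbit_from_apsides(r_min, r_max):
    """Return (a, e, k, C) from the perihelion and aphelion distances.

    k + C = 1 / r_min and k - C = 1 / r_max, with k = G*M*m**2 / ell**2.
    """
    k = 0.5 * (1.0 / r_min + 1.0 / r_max)
    c = 0.5 * (1.0 / r_min - 1.0 / r_max)
    e = c / k                    # equals (r_max - r_min) / (r_max + r_min)
    a = 0.5 * (r_min + r_max)    # semi-major axis
    return a, e, k, c

# Rounded, approximate perihelion and aphelion of Earth in metres (assumed values).
a_earth, e_earth, k_earth, c_earth = orbit_from_apsides(147.1e9, 152.1e9)
print(a_earth, e_earth)
```

Solving for $k$ and $C$ first and then forming $e = C/k$ mirrors the order of the algebra: the eccentricity comes out as the ratio of the oscillating part of $u$ to its constant part.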

From here, we can arrive at Kepler’s law of equal areas. This is the observation that the line between an orbiting body and the sun sweeps out equal areas in equal intervals of time.

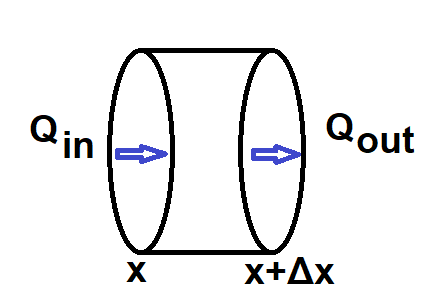

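Start with an infinitesimal piece of swept area:

$$dA = \frac{1}{2} r^2\, d\theta$$

Divide by an infinitesimal piece of time:

$$\frac{dA}{dt} = \frac{1}{2} r^2 \dot{\theta} = \frac{\ell}{2m}$$

And there you have it: the rate at which area is swept out is constant.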
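The equal-area law can be seen in a small simulation. The sketch below is an illustration under stated assumptions, not the author's code: it integrates a body around a fixed sun at the origin with a velocity-Verlet scheme, in units where $GM = 1$, and adds up the thin triangles swept out over two time windows of equal length, one near closest approach and one near farthest approach. Function names and initial conditions are hypothetical.

```python
import math

def simulate(x, y, vx, vy, gm=1.0, dt=1e-3, steps=6000):
    """Velocity-Verlet integration around a fixed sun at the origin."""
    path = [(x, y)]
    r3 = (x * x + y * y) ** 1.5
    ax, ay = -gm * x / r3, -gm * y / r3
    for _ in range(steps):
        vx += 0.5 * dt * ax          # half kick
        vy += 0.5 * dt * ay
        x += dt * vx                 # drift
        y += dt * vy
        r3 = (x * x + y * y) ** 1.5
        ax, ay = -gm * x / r3, -gm * y / r3
        vx += 0.5 * dt * ax          # half kick
        vy += 0.5 * dt * ay
        path.append((x, y))
    return path

def swept_area(path, start, stop):
    """Sum of triangle areas (sun, p_i, p_{i+1}) for start <= i < stop."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(path[start:stop], path[start + 1:stop + 1]):
        total += 0.5 * (x0 * y1 - x1 * y0)
    return total

# Start at closest approach of an illustrative eccentric orbit.
path = simulate(x=0.5, y=0.0, vx=0.0, vy=math.sqrt(3.0))
near = swept_area(path, 0, 500)       # 0.5 time units near perihelion
far = swept_area(path, 2900, 3400)    # 0.5 time units near aphelion
print(near, far)
```

The body moves much faster near the sun than far from it, yet the two areas agree, because each triangle's area is half the angular momentum per unit mass times the time step.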

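We can express this constant in terms of the semi-major axis, and arrive at another of Kepler’s laws relating the orbital period to the semi-major axis. Using $\ell^2 = G M m^2\, a (1 - e^2)$:

$$\frac{dA}{dt} = \frac{\ell}{2m} = \frac{1}{2}\sqrt{G M a (1 - e^2)}$$

The full orbit area $A$ and period $T$ are then related by:

$$A = \frac{dA}{dt}\, T = \frac{T}{2}\sqrt{G M a (1 - e^2)}$$

The area of the ellipse in terms of the semi-major axis is:

$$A = \pi a b = \pi a^2 \sqrt{1 - e^2}$$

So we then have the law relating the orbital period with the semi-major axis:

$$T^2 = \frac{4\pi^2}{G M}\, a^3$$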

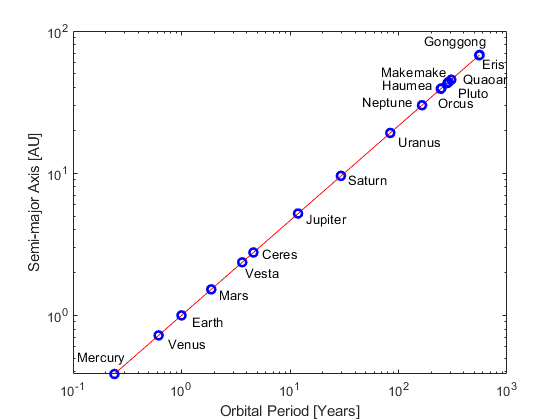
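As a quick numerical check of the period law $T^2 = 4\pi^2 a^3 / (GM)$, the sketch below evaluates it for an orbit with a semi-major axis of one astronomical unit. The constants are standard published values for the sun's gravitational parameter and the astronomical unit; the function name is hypothetical.

```python
import math

GM_SUN = 1.32712440018e20   # m^3 / s^2, gravitational parameter G*M of the sun
AU = 1.495978707e11         # m, astronomical unit
SECONDS_PER_DAY = 86400.0

def orbital_period(a, gm=GM_SUN):
    """Period in seconds for semi-major axis a in metres: T = 2*pi*sqrt(a^3 / GM)."""
    return 2.0 * math.pi * math.sqrt(a**3 / gm)

earth_days = orbital_period(AU) / SECONDS_PER_DAY
print(earth_days)
```

The result is close to the length of a year, as expected, and note that the planet's own mass and the eccentricity do not appear in the formula.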

Take the log of both sides, and we can see how planets and other objects fall on the same straight line in a log-log plot:

$$\log T = \frac{3}{2}\log a + \frac{1}{2}\log\frac{4\pi^2}{G M}$$

The orbital period and semi-major axis of the planets were gathered from Wikipedia. However, if you were to measure these quantities yourself, you could use regression to check Kepler’s law and measure the mass of the sun.

From the numbers given in Wikipedia, we compute the ratio between exponents on the period and semi-major axis to be and the mass of the sun to be

.
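The regression described above can be sketched with the standard library alone. The numbers in the table are rounded, commonly quoted semi-major axes (in AU) and periods (in years) typed in by hand as assumptions; they are not the dataset used for the plot, so the output will not necessarily reproduce the figures quoted in this post. The slope is fitted freely, while the mass estimate assumes the slope is exactly 3/2 so that the intercept is not extrapolated far outside the data.

```python
import math

G = 6.67430e-11            # m^3 kg^-1 s^-2
AU = 1.495978707e11        # m
YEAR = 365.25 * 86400.0    # s, Julian year

# (semi-major axis in AU, period in years): rounded illustrative values.
PLANETS = {
    "Mercury": (0.387, 0.241), "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000), "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.86), "Saturn": (9.537, 29.45),
    "Uranus": (19.19, 84.01), "Neptune": (30.07, 164.8),
}

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope*x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

log_a = [math.log(a * AU) for a, _ in PLANETS.values()]
log_t = [math.log(t * YEAR) for _, t in PLANETS.values()]

slope, _ = fit_line(log_a, log_t)

# With the slope fixed at 3/2: log T = 1.5*log a + c, where c = 0.5*log(4*pi^2 / (G*M)).
c = sum(lt - 1.5 * la for la, lt in zip(log_a, log_t)) / len(log_a)
sun_mass = 4.0 * math.pi**2 / (G * math.exp(2.0 * c))
print(slope, sun_mass)
```

Fixing the exponent for the mass estimate is a design choice: a freely fitted intercept corresponds to $a = 1$ metre, far from the data, so small slope errors would be amplified.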